Prior to starting a historical data migration, ensure you do the following:

- Create a project on our US or EU Cloud.

- Sign up to a paid product analytics plan on the billing page (historic imports are free but this unlocks the necessary features).

- Raise an in-app support request with the Data pipelines topic detailing where you are sending events from, how, the total volume, and the speed. For example, "we are migrating 30M events from a self-hosted instance to EU Cloud using the migration scripts at 10k events per minute."

- Wait for the OK from our team before starting the migration process to ensure that it completes successfully and is not rate limited.

- Set the historical_migration option to true when capturing events in the migration.

Migrating data from Google Analytics is a three-step process:

- Setting up the Google Analytics BigQuery streaming export

- Querying Google Analytics data from BigQuery

- Converting Google Analytics event data to the PostHog schema and capturing in PostHog

Want a higher-level overview of PostHog? Check out our introduction to PostHog for Google Analytics users.

1. Setting up the Google Analytics BigQuery export

Unfortunately, Google Analytics' historical data exports are limited. The best way to get data from Google Analytics is to set up the BigQuery export.

To do this, start by creating a Google Cloud account and project. Afterwards, do the basic setup for BigQuery, including enabling the BigQuery API. The BigQuery sandbox works for this.

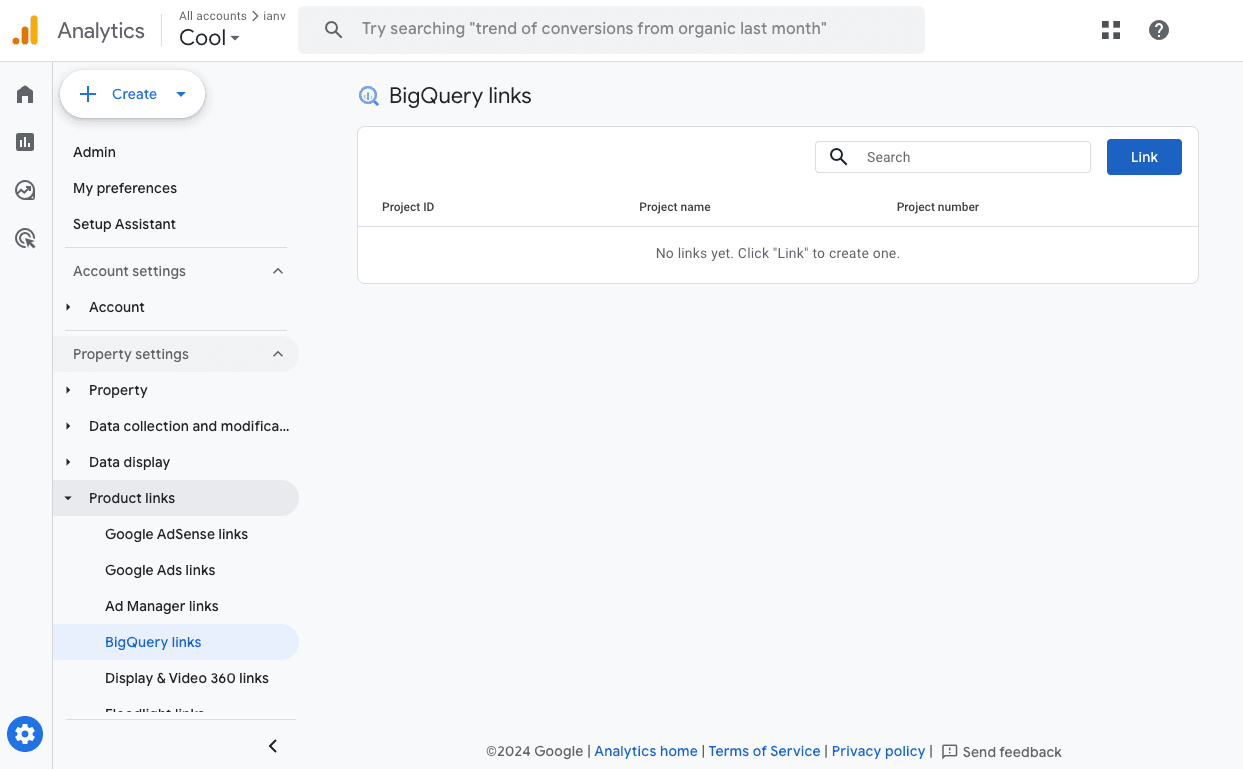

Once done, go to the Admin section of your Google Analytics account. Under Product links, select BigQuery links and then click the Link button.

Choose your project, choose your export type (you can make either daily or streaming work), click Next, and then Submit. This sets up a link to your BigQuery account, but it can take more than 24 hours to see data in BigQuery.

2. Querying Google Analytics data from BigQuery

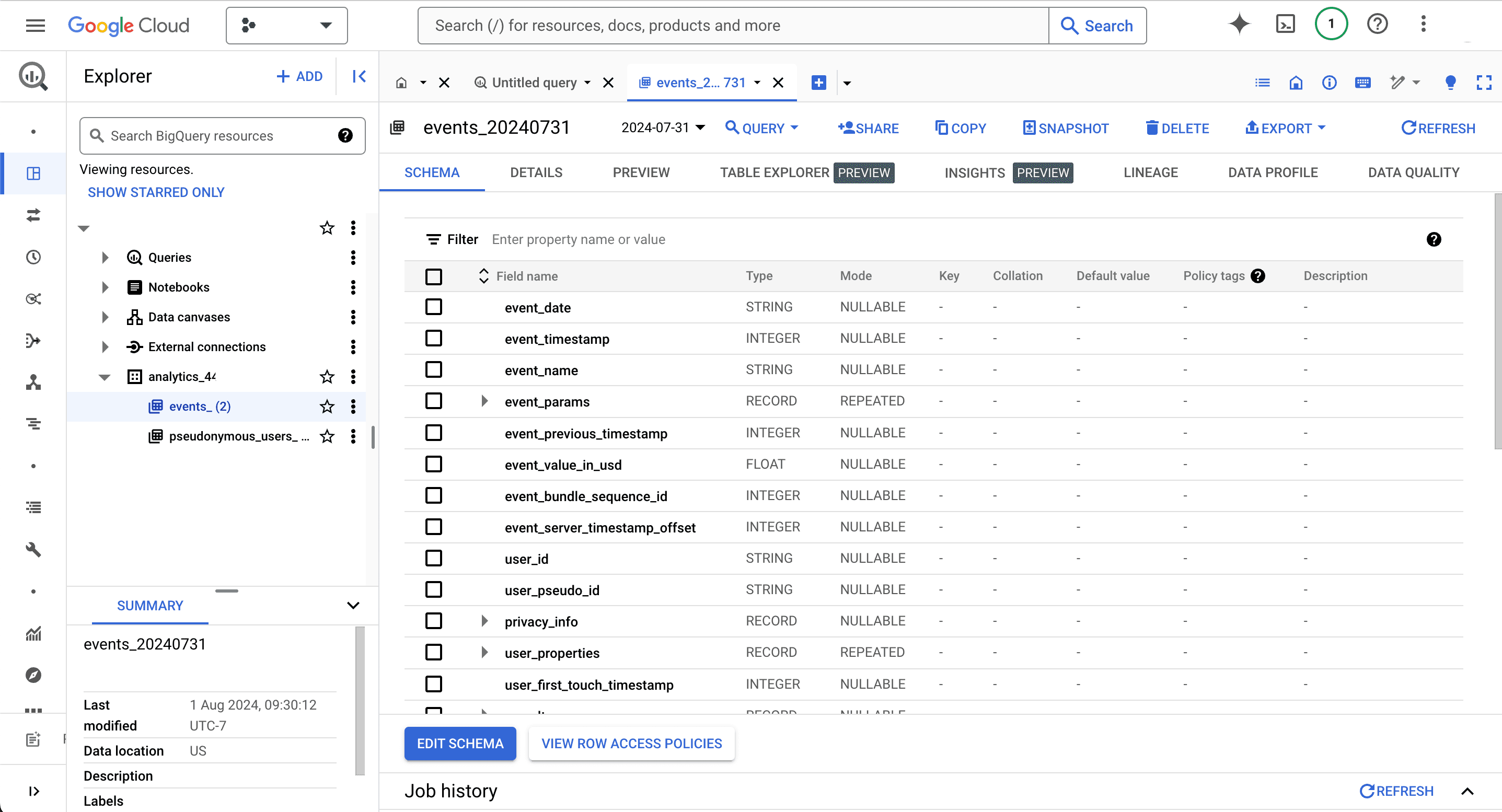

After the link is set up, data is automatically added to BigQuery under a dataset named after your Google Analytics property ID, prefixed with analytics_, like analytics_123456789. Within this dataset are events tables whose names depend on your export type. If you used daily exports, events tables have a name like events_20240731.

To query this data, use the BigQuery Python client. This requires setting up and authenticating with the Google Cloud CLI.

Once done, we can then query events like this:
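A minimal sketch of such a query, assuming the google-cloud-bigquery package is installed and using the example dataset and table names from above. The helper names and the my-gcp-project project ID are placeholders for illustration, not part of any official script:

```python
def build_events_query(dataset: str, table: str, limit: int = 100) -> str:
    """Build a SQL query against a Google Analytics export table."""
    return (
        "SELECT *\n"
        f"FROM `{dataset}.{table}`\n"
        f"LIMIT {int(limit)}"
    )


def fetch_events(project_id: str, dataset: str, table: str, limit: int = 100):
    """Run the query and return the resulting rows."""
    # Imported here so build_events_query can be used without the client installed.
    from google.cloud import bigquery

    client = bigquery.Client(project=project_id)
    query_job = client.query(build_events_query(dataset, table, limit))
    return query_job.result()


# Example call (placeholder project ID; dataset and table names from the text above):
# rows = fetch_events("my-gcp-project", "analytics_123456789", "events_20240731")
```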

This returns a BigQuery RowIterator object.

3. Converting GA event data to the PostHog schema and capturing

The schema of Google Analytics' exported event data is similar to PostHog's schema, but it requires conversion to work with the rest of PostHog's data. You can see details on the Google Analytics schema in their docs and events and properties PostHog autocaptures in our docs.

For example, many PostHog events and properties are prefixed with the $ character.

To convert to PostHog's schema, we need to:

- Convert the event_name values like user_engagement to $autocapture and page_view to $pageview.

- Convert the event_timestamp to ISO 8601.

- Flatten the event data by pulling useful data out of records like event_params and items as well as dictionaries like device and geo.

- Create the event properties by looping through Google Analytics event data, dropping irrelevant data, converting some to PostHog's schema, and including data unique to Google Analytics or custom properties.

- Do the same conversion with person properties. These are then added to the $set property.
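The first three steps can be sketched roughly as follows. This is an illustration under assumptions, not the full conversion: it assumes each row has already been turned into a plain dictionary, that event_timestamp is in microseconds since the Unix epoch (UTC), and that user_pseudo_id is used as the distinct ID. The function names and the device_ and geo_ property prefixes are made up for this example, and person properties are left out.

```python
from datetime import datetime, timedelta, timezone

# Mapping taken from the steps above; extend it for other event names.
EVENT_NAME_MAP = {
    "user_engagement": "$autocapture",
    "page_view": "$pageview",
}

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def convert_event_name(name: str) -> str:
    """Map a Google Analytics event name to its PostHog equivalent."""
    return EVENT_NAME_MAP.get(name, name)


def convert_timestamp(event_timestamp: int) -> str:
    """Convert microseconds since the Unix epoch (UTC) to ISO 8601."""
    return (EPOCH + timedelta(microseconds=event_timestamp)).isoformat()


def flatten_params(event_params: list) -> dict:
    """Flatten key/value records into a plain dictionary."""
    flat = {}
    for param in event_params:
        value = param.get("value") or {}
        # Each value record is assumed to hold one non-null typed field.
        for typed in ("string_value", "int_value", "float_value", "double_value"):
            if value.get(typed) is not None:
                flat[param["key"]] = value[typed]
                break
    return flat


def to_posthog_event(row: dict) -> dict:
    """Build a PostHog-style event dictionary from one exported row."""
    properties = flatten_params(row.get("event_params") or [])
    # Pull non-null values out of the device and geo dictionaries.
    for section in ("device", "geo"):
        for key, val in (row.get(section) or {}).items():
            if val is not None:
                properties[f"{section}_{key}"] = val  # illustrative naming
    return {
        "event": convert_event_name(row["event_name"]),
        "distinct_id": row["user_pseudo_id"],  # assumption: used as distinct ID
        "timestamp": convert_timestamp(row["event_timestamp"]),
        "properties": properties,
    }
```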

Once this is done, you can capture events into PostHog using the Python SDK with historical_migration set to true.
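As a rough sketch of the capture step, assuming the posthog Python package is installed and that each converted event is a dictionary with distinct_id, event, properties, and an ISO 8601 timestamp. The make_client and capture_events helpers and that dictionary shape are assumptions for this example; the API key is a placeholder.

```python
from datetime import datetime


def make_client(api_key: str, host: str):
    """Create a PostHog client configured for a historical migration."""
    # Imported here so capture_events can be exercised without the SDK installed.
    from posthog import Posthog

    return Posthog(api_key, host=host, historical_migration=True)


def capture_events(client, events) -> int:
    """Send converted events through the client and return how many were sent."""
    sent = 0
    for event in events:
        client.capture(
            distinct_id=event["distinct_id"],
            event=event["event"],
            properties=event.get("properties", {}),
            # Pass the original event time so events are not stamped with "now".
            timestamp=datetime.fromisoformat(event["timestamp"]),
        )
        sent += 1
    return sent


# Example call (placeholder key; use the EU host if your project is on EU Cloud):
# client = make_client("<ph_project_api_key>", "https://us.i.posthog.com")
# capture_events(client, converted_events)
# client.flush()  # send any queued events before the script exits
```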

Here's an example version of a full Python script that gets data from BigQuery, converts it to PostHog's schema, and captures it in PostHog.

This script may need modification depending on the structure of your Google Analytics data, but it gives you a start.